CloudWatch: Maximize Value and Minimize Costs

Amazon Web Services is great for quickly enabling agile infrastructure, but that agility can make costs hard to control or predict. Unfortunately, monitoring AWS resources can exacerbate the problem, because the very act of querying AWS data can cost more than the monitoring service itself.

Any AWS monitoring tool worth its salt will pull in data via the CloudWatch API because, for certain resources, it is the only data available. However, AWS charges for requests to this API, so AWS monitoring comes with associated CloudWatch costs, and the API costs alone can be expensive if you don’t pay attention to how your monitoring is done.

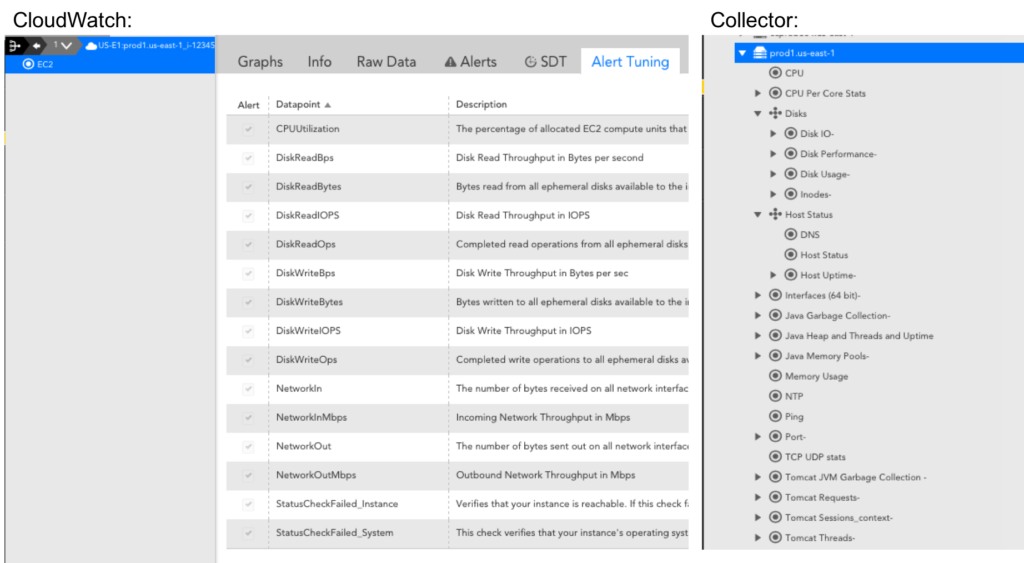

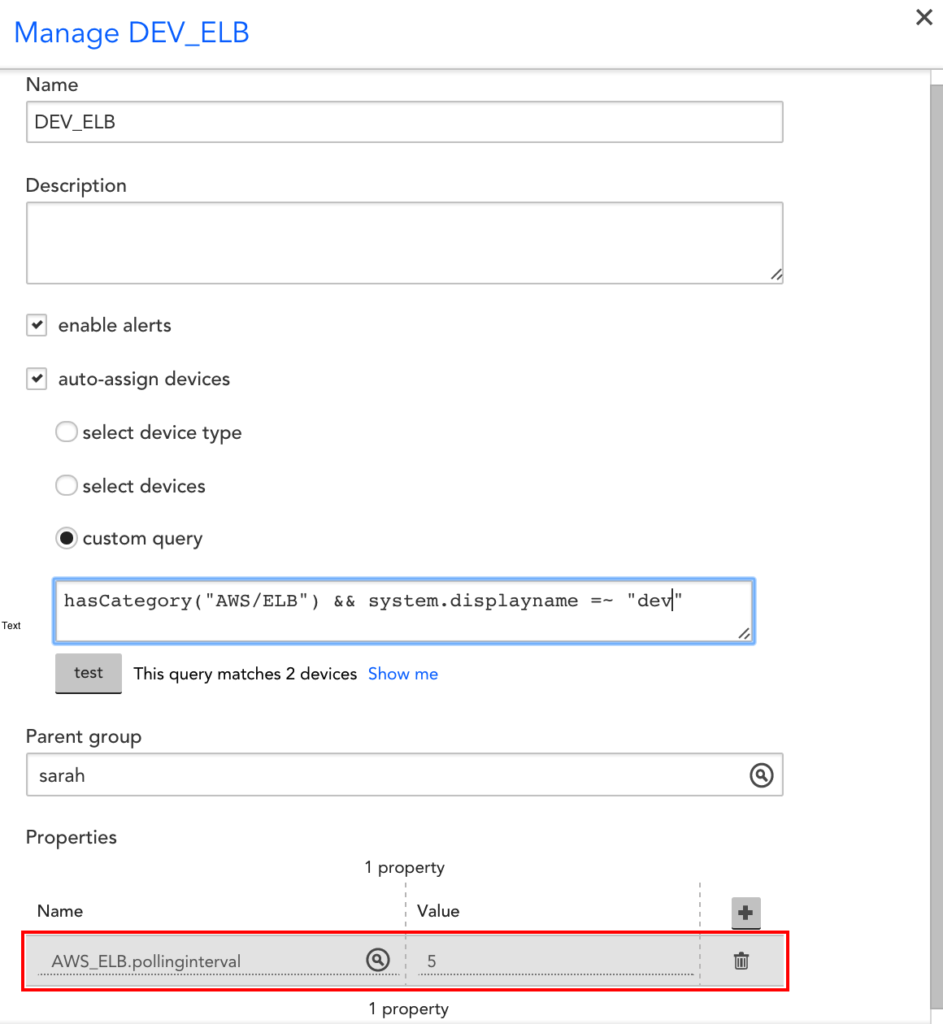

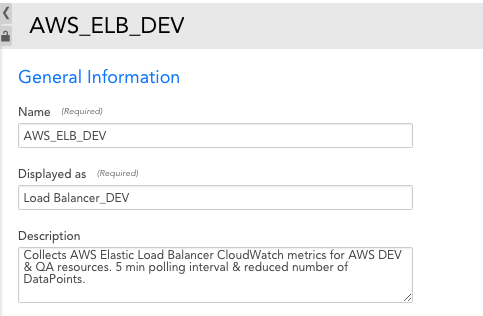

To avoid blowing your budget on CloudWatch costs alone, it is important to ensure that your monitoring tool requests CloudWatch data as efficiently as possible. Similarly, you should make sure that your monitoring tool gives you the flexibility to adjust how CloudWatch data is requested, so that you have the option of optimizing it for your environment. With LogicMonitor, Collector-based monitoring and flexible polling intervals make it easy to minimize your CloudWatch costs while still maximizing the value of your monitored data.

Requests to the CloudWatch API are restricted to one metric per request, so the CloudWatch cost of monitoring is directly proportional to the number of metrics monitored. Each AWS service exposes a different number of metrics via the CloudWatch API, so the cost varies from service to service. For example, Elasticsearch publishes 12 metrics to CloudWatch by default, while Lambda publishes only 4. Assuming the same request frequency, Elasticsearch is 3 times as expensive to monitor as Lambda if you monitor every available metric.

CloudWatch cost is also directly proportional to the frequency of requests. While it is possible to request more data at less frequent intervals, doing so can prevent real-time alert evaluation, which reduces the value of the monitored data. Because of their purpose, certain services, like ELB, warrant more frequent data collection, while longer collection intervals are acceptable for others, like S3. LogicMonitor’s AWS DataSources have default collection intervals set with this in mind.
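The two proportionalities described so far (number of metrics and request frequency) can be combined into a rough request count. The sketch below is illustrative only: the function name `requests_per_period` and the 1-minute and 5-minute intervals are assumptions chosen for the example, not documented LogicMonitor defaults. It uses the metric counts quoted above and assumes one API request per metric per poll.

```python
def requests_per_period(metric_count: int, interval_minutes: float, days: int = 30) -> int:
    """Estimate CloudWatch API requests for one resource over `days` days.

    Assumes one request per metric per poll.
    """
    polls = days * 24 * 60 / interval_minutes
    return round(metric_count * polls)


# Metric counts are the ones quoted in the text; the intervals are hypothetical.
elasticsearch = requests_per_period(metric_count=12, interval_minutes=5)
lambda_fn = requests_per_period(metric_count=4, interval_minutes=5)

# Same interval: the request ratio equals the ratio of metric counts.
print(elasticsearch / lambda_fn)  # 3.0

# Same metrics: polling every minute instead of every 5 minutes costs 5x the requests.
print(requests_per_period(4, 1) / requests_per_period(4, 5))  # 5.0
```

Halving the number of metrics or doubling the interval has the same effect on request volume, which is why both are worth tuning per service.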

Given that CloudWatch API requests cost $0.01 per 1,000 requests, we can calculate the cost of monitoring different services, based on the datapoints and collection intervals in the default LogicMonitor AWS DataSources. To illustrate the point, here are the three most and least expensive services to monitor, respectively:

Top three:

ElastiCache: $10.35 per Memcached cluster per 30 days, $9.50 per Redis cluster per 30 days

RDS: $6.90 per RDS instance per 30 days

Auto Scaling (aggregate & group metrics): $6.50 per Auto Scaling group per 30 days

Bottom three:

Lambda: $0.35 per Lambda function per 30 days

SNS: $0.34 per SNS topic per 30 days

S3: $0.02 per S3 bucket per 30 days
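As a rough check on figures like these, the arithmetic can be sketched as follows. The rate of $0.01 per 1,000 requests and the 4 Lambda metrics come from the text above. The function names and the 5-minute Lambda interval are assumptions made for illustration; the interval is a guess that happens to reproduce the quoted Lambda figure, not a documented default.

```python
PRICE_PER_1000_REQUESTS = 0.01  # USD, the rate quoted in this article


def cost_per_30_days(metric_count, interval_minutes, resources=1):
    """Estimated CloudWatch API cost in USD, one request per metric per poll."""
    polls = 30 * 24 * 60 / interval_minutes
    requests = metric_count * polls * resources
    return requests / 1000 * PRICE_PER_1000_REQUESTS


def implied_requests(cost_usd):
    """Number of API requests that a given 30-day cost corresponds to."""
    return round(cost_usd / PRICE_PER_1000_REQUESTS * 1000)


# Lambda: 4 metrics, assumed 5-minute collection interval.
lambda_cost = cost_per_30_days(metric_count=4, interval_minutes=5)
print(f"${lambda_cost:.2f}")  # $0.35

# The same function scaled to a hypothetical fleet of 200 functions.
print(f"${cost_per_30_days(4, 5, resources=200):.2f}")  # $69.12

# Working backwards from the quoted RDS figure of $6.90 per instance.
print(implied_requests(6.90))  # 690000
```

Per-resource costs look small in isolation, but they scale linearly with the number of resources, which is where the monthly bill can become significant.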

Given the above information, here is how LogicMonitor can help you get the most bang for your buck:

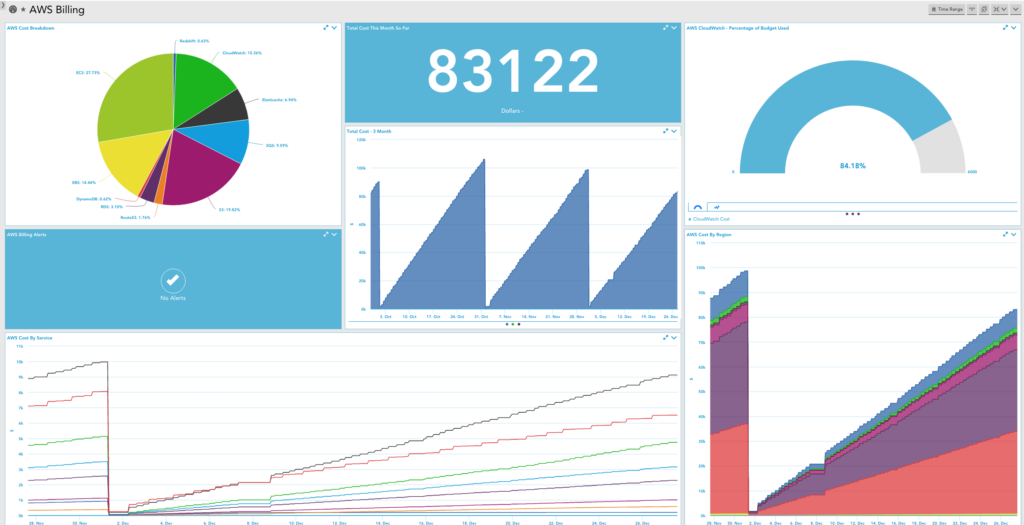

In addition to optimizing your AWS monitoring to minimize your bill, we recommend that you monitor AWS billing data itself in LogicMonitor. Use the AWS billing per region, service and tag DataSources in LogicMonitor, and set thresholds for billing metrics so that you receive alerts for excessive or unusual billing values. Consider setting up a dashboard or report that you can review on a daily or weekly basis. This will help you react to and better manage your CloudWatch expenses, so that you minimize your spend while maintaining the value of monitored performance data for your AWS environment.

Retrieving data from CloudWatch for alerting and long-term trends is great, but make sure your monitoring tool doesn’t eat your whole cloud budget with unnecessary API calls!
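To make the idea of "excessive" versus "unusual" billing values concrete, here is a minimal sketch of the logic such thresholds express. In practice thresholds are configured in the monitoring tool rather than written as code; the function `billing_alerts`, its parameters, and the sample numbers are all hypothetical and exist only for illustration.

```python
from statistics import mean


def billing_alerts(daily_costs, static_limit, spike_factor=2.0, window=7):
    """Return (day_index, reason) pairs for excessive or unusual daily costs.

    "excessive": the cost exceeds a fixed limit.
    "unusual":   the cost exceeds spike_factor times the trailing average.
    """
    alerts = []
    for i, cost in enumerate(daily_costs):
        if cost > static_limit:
            alerts.append((i, "excessive"))
            continue
        history = daily_costs[max(0, i - window):i]
        if history and cost > spike_factor * mean(history):
            alerts.append((i, "unusual"))
    return alerts


# Made-up daily CloudWatch spend in USD, for illustration only.
sample = [1.0, 1.1, 0.9, 1.0, 2.5, 1.0, 6.0]
print(billing_alerts(sample, static_limit=5.0))
# [(4, 'unusual'), (6, 'excessive')]
```

A fixed limit catches outright budget overruns, while the trailing-average comparison catches sudden jumps that are still below the limit, such as a newly added DataSource polling far more often than intended.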

© LogicMonitor 2026 | All rights reserved. | All trademarks, trade names, service marks, and logos referenced herein belong to their respective companies.