The traditional data center is undergoing a dramatic transformation. As artificial intelligence reshapes industries from healthcare to financial services, it’s not just the applications that are changing—the very infrastructure powering these innovations requires a fundamental rethinking.

Today’s data center bears little resemblance to the server rooms of the past. The world is seeing a convergence of high-density computing, specialized networks, and hybrid architectures designed specifically to handle the demands of AI workloads.

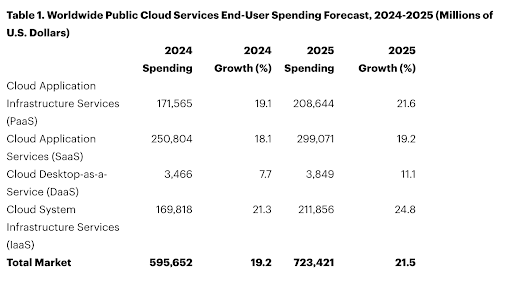

Source: Gartner (November 2024)

This transformation comes at a critical time. With analyst projections indicating that over 90% of organizations will adopt hybrid cloud by 2027, CIOs face mounting pressure to balance innovation with operational stability. AI workloads demand unprecedented computing power, driving a surge in data center capacity requirements and forcing organizations to rethink their approach to sustainability, cost management, and infrastructure design.

The New Data Center Architecture

At the heart of this evolution is a more complex and distributed infrastructure. Modern data centers span public clouds, private environments, edge locations, and on-premises hardware, all orchestrated to support increasingly sophisticated AI applications.

The technical requirements are substantial. High-density GPU clusters, previously the domain of scientific computing, are becoming standard components. These systems require specialized cooling solutions and power distribution units to manage thermal output effectively. Storage systems must deliver microsecond-level access to massive datasets, while networks need to handle the increased traffic between distributed components.

This distributed architecture necessarily creates hybrid environments where workloads and resources are spread across multiple locations and platforms. While this hybrid approach provides the flexibility and scale needed for AI operations, it introduces inherent challenges in resource orchestration, performance monitoring, and maintaining consistent service levels across different environments. Organizations must now manage not just individual components but the complex interactions between on-premises infrastructure, cloud services, and edge computing resources.

The Kubernetes Factor in Modern Data Centers

Container orchestration, particularly through Kubernetes (K8s), has emerged as a crucial element in managing AI workloads. Containerization provides the agility needed to scale AI applications effectively, but it also introduces new monitoring challenges as containers spin up and down rapidly across different environments.

The dynamic nature of containerized AI workloads adds complexity to resource management. Organizations must track GPU allocation, memory usage, and compute resources across multiple clusters while ensuring optimal performance. This complexity multiplies in hybrid environments, where containers may run on-premises one day and in the cloud the next, so visibility across the entire container ecosystem becomes critical.

As containerized AI applications become central to business operations, organizations need granular insights into both performance and cost implications. Understanding the resource consumption of specific AI workloads helps teams optimize container placement and resource allocation, directly impacting both operational costs and energy efficiency.
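As a rough illustration of the per-workload accounting described above, the following Python sketch totals GPU-hours per cluster and applies an hourly rate to each. It is a minimal sketch under stated assumptions: the `Workload` record, the cluster labels, and the rates are all hypothetical sample values, not output from Kubernetes or any monitoring platform.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """One containerized AI workload (hypothetical sample record)."""
    name: str
    cluster: str   # label for where the workload ran, e.g. "on-prem" or "cloud"
    gpus: int      # number of GPUs allocated to the workload
    hours: float   # runtime in hours


def gpu_hours_by_cluster(workloads):
    """Sum GPU-hours (gpus * hours) for each cluster."""
    totals = defaultdict(float)
    for w in workloads:
        totals[w.cluster] += w.gpus * w.hours
    return dict(totals)


def estimated_cost(gpu_hours, rate_per_gpu_hour):
    """Multiply each cluster's GPU-hours by its assumed hourly rate."""
    return {cluster: hours * rate_per_gpu_hour[cluster]
            for cluster, hours in gpu_hours.items()}


# Hypothetical workloads and rates, for illustration only.
workloads = [
    Workload("training-job", "on-prem", gpus=8, hours=10.0),
    Workload("inference-api", "cloud", gpus=2, hours=24.0),
    Workload("batch-scoring", "cloud", gpus=4, hours=3.0),
]
usage = gpu_hours_by_cluster(workloads)
costs = estimated_cost(usage, {"on-prem": 1.5, "cloud": 3.0})
print(usage)
print(costs)
```

In practice the input records would come from whatever telemetry the organization already collects; the point of the sketch is only that placement decisions become comparable once usage is expressed in a common unit such as GPU-hours.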

Balancing Cost and Sustainability

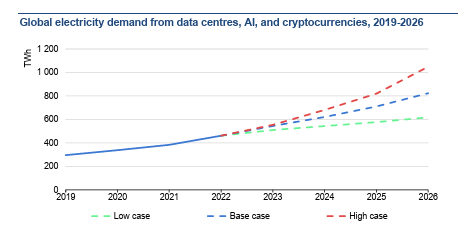

Perhaps the most pressing challenge for CIOs is managing the environmental and financial impact of these high-powered environments. Data centers, including cryptocurrency mining and AI workloads, consumed about 460 TWh of electricity worldwide in 2022, almost 2% of total global electricity demand. That figure could more than double by 2026, driven largely by growing AI workloads.

Sources: Joule (2023), de Vries, The growing energy footprint of AI; CCRI Indices (carbon-ratings.com); The Guardian, Use of AI to reduce data centre energy use; Motors in data centres; The Royal Society, The future of computing beyond Moore’s Law; Ireland Central Statistics Office, Data Centres electricity consumption 2022; and Danish Energy Agency, Denmark’s energy and climate outlook 2018.

Leading organizations are adopting sophisticated approaches to resource optimization. These include:

- Dynamic workload distribution between on-premises and cloud environments

- Automated resource scaling based on actual usage patterns

- Implementation of energy-efficient cooling solutions

- Real-time monitoring of power usage effectiveness

These optimization strategies, while essential, require comprehensive visibility across the entire infrastructure stack to be truly effective.
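Power usage effectiveness (PUE), the last item in the list above, is conventionally defined as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean no overhead at all. The Python sketch below computes it from two meter readings; the function names and the sample readings are assumptions made for illustration, not figures from this article.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue: float) -> float:
    """Fraction of facility energy that goes to non-IT overhead such as cooling."""
    return 1.0 - 1.0 / pue


# Hypothetical meter readings over the same interval, in kWh.
pue = power_usage_effectiveness(total_facility_kwh=1500.0, it_equipment_kwh=1000.0)
share = overhead_share(pue)
print(f"PUE: {pue:.2f}, overhead share: {share:.1%}")
```

Tracking this ratio over short intervals, rather than as an annual average, is what makes it useful for the real-time monitoring mentioned above.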

Hybrid Observability in the Age of the Modern Data Center

As AI workloads become more complex, the next frontier in data center evolution is comprehensive, hybrid observability. Traditional monitoring approaches struggle to provide visibility across hybrid environments, especially when managing resource-intensive AI applications.

Leading enterprises are increasingly turning to AI-powered observability platforms that can integrate data from thousands of sources across on-premises, cloud, and containerized environments.

LogicMonitor Envision is one platform that has proven its value in this new reality. Syngenta, a global agricultural technology company, reduced alert noise by 90% after implementing LM Envision and Edwin AI, the first agentic AI built for IT. The platform allowed their IT teams to shift from reactive troubleshooting to strategic initiatives. This transformation is becoming essential as organizations balance multiple priorities:

- Managing AI workload performance across hybrid environments

- Optimizing resource allocation to control costs

- Meeting sustainability goals through efficient resource utilization

- Supporting continuous innovation while maintaining reliability

These interconnected challenges demand more than traditional monitoring capabilities—they require a comprehensive approach to infrastructure visibility and control.

The Strategic Imperative for Modern Data Centers

The message for CIOs is clear: as data centers evolve to support AI initiatives, full-stack observability becomes more than a monitoring tool. It’s a strategic imperative. Organizations need a partner who can deliver actionable insights at scale, helping them navigate the complexity of modern infrastructure while accelerating their digital transformation journey.
