Seriously, I said that to myself. In reality, I had a basic understanding of CPU load on a Linux server, but I recently learned a few things that I figured I’d share with the world.

I think everyone knows that if you run the top command, you get the 1-, 5-, and 15-minute CPU load averages for the box. Who cares, though? It’s obvious to me that what I want to know is CPU percent used, because in my mind that tells me how much of the CPU’s resources are being utilized. So what is this CPU load average metric?
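On Linux, the three numbers top shows come from /proc/loadavg, which also lists the runnable/total task counts and the most recently created PID. If you want them in a script, here’s a minimal Python sketch that parses that file. The names `LoadAvg`, `parse_loadavg`, and `read_loadavg` are my own; if you only need the three averages, the standard library’s `os.getloadavg()` returns them on Unix-like systems.

```python
from typing import NamedTuple


class LoadAvg(NamedTuple):
    one: float       # 1-minute load average
    five: float      # 5-minute load average
    fifteen: float   # 15-minute load average
    runnable: int    # scheduling entities currently runnable
    total: int       # scheduling entities that exist on the system
    last_pid: int    # PID most recently created


def parse_loadavg(text: str) -> LoadAvg:
    """Parse a /proc/loadavg line such as '0.20 0.18 0.12 1/80 11206'."""
    one, five, fifteen, tasks, last_pid = text.split()
    runnable, total = tasks.split("/")
    return LoadAvg(float(one), float(five), float(fifteen),
                   int(runnable), int(total), int(last_pid))


def read_loadavg(path: str = "/proc/loadavg") -> LoadAvg:
    """Read the live values; this file only exists on Linux."""
    with open(path) as f:
        return parse_loadavg(f.read())
```

On a Linux box, `read_loadavg().one` gives you the same 1-minute number you’d see in top.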

For those of you who already know all about CPU load average, now is a good time to skip ahead to the part of the post where I give my philosophical opinion on whether load average is useful. For the rest of you, let’s briefly discuss what CPU load average actually is.

CPU load is the number of processes that are either using the CPU or queued up, ready and waiting to use it. This is also referred to as the run queue length. (On Linux, the count also includes processes in uninterruptible sleep, which usually means they are waiting on disk I/O.) For example, say you have 1 CPU with 1 core. A load average of 1 means you are at capacity, and anything more than that starts to queue. A load average of 0.5 means you are at half capacity, with room for more load. But Cevin, I don’t have 1 CPU, I have 2 CPUs. Good point! Who only has 1 CPU these days? Not me, that’s for sure! So what load average would put 2 CPUs at capacity? It depends.
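Where do the 1-, 5-, and 15-minute figures come from? About every 5 seconds, the Linux kernel samples that run-queue count and folds it into three exponentially damped moving averages, one per window. Below is a minimal floating-point sketch of that update; the kernel does the same math in fixed-point integers, and the function names here are my own, not a kernel API.

```python
import math
from typing import Iterable

SAMPLE_INTERVAL_S = 5  # approximate seconds between kernel samples


def decay_factor(window_minutes: float) -> float:
    """Share of the old average kept at each sample: e^(-5 s / window)."""
    return math.exp(-SAMPLE_INTERVAL_S / (window_minutes * 60))


def update(load: float, active_tasks: int, window_minutes: float) -> float:
    """One step of the exponentially damped moving average."""
    e = decay_factor(window_minutes)
    return load * e + active_tasks * (1 - e)


def simulate(samples: Iterable[int], window_minutes: float,
             start: float = 0.0) -> float:
    """Feed instantaneous task counts (one per sample) through the average."""
    load = start
    for n in samples:
        load = update(load, n, window_minutes)
    return load
```

Starting from an idle box, one minute of 2 busy tasks (12 samples) gives `simulate([2] * 12, 1)` ≈ 1.26 but `simulate([2] * 12, 15)` ≈ 0.13, which is why the 15-minute number lags behind short spikes.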

How many cores does each CPU have? Each core can handle a load of 1, which means that core is at capacity: one process running on it and nothing queued up waiting. Think of 1 per core as 100% capacity. If you have 2 physical CPUs with 4 cores each, you have 8 cores in total. Duh, right? Here’s the point: with 8 cores, a load average of 8 puts you at capacity. In this scenario, anything less than 8 means you are under capacity, and anything over 8 means processes are waiting in the queue.
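To turn that rule of thumb into numbers, divide the load average by the number of cores. Here’s a small Python sketch, assuming (as described above) that each core can handle a load of 1; the helper names are hypothetical.

```python
import os


def load_per_core(load: float, cores: int) -> float:
    """Normalize load: 1.0 per core means every core busy, nothing waiting."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return load / cores


def capacity_status(load: float, cores: int) -> str:
    """Classify a load average against the host's core count."""
    ratio = load_per_core(load, cores)
    if ratio < 1.0:
        return "under capacity"
    if ratio == 1.0:
        return "at capacity"
    return "queuing"


def current_status() -> str:
    """Live check on Unix-like systems using the 1-minute average.

    os.cpu_count() counts logical CPUs, so hyperthreads count as cores.
    """
    one_minute, _, _ = os.getloadavg()
    return capacity_status(one_minute, os.cpu_count() or 1)


# Two physical CPUs with four cores each, as in the example above.
CORES = 2 * 4
```

With 8 cores, `capacity_status(12.0, CORES)` returns `"queuing"`. Keep in mind that `os.cpu_count()` reports logical CPUs, so on a box with hyperthreading the "cores" it counts are not all full physical cores.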

One more caveat: a load greater than your number of cores tells you that processes are queuing, but it tells you nothing about the type of work being queued. Some of the queued jobs may be CPU-hungry and take a long time to run, while others may issue a request (a disk read, for example) and then give up the CPU while they wait. It’s like saying “there are 100 vehicles queued at the freeway on-ramp.” It makes a big difference whether those 100 vehicles are zippy motorbikes or giant semi-trailers. So it’s possible to have a high load and low CPU utilization.
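To catch a high load with low CPU utilization, you have to measure utilization separately. On Linux, the first line of /proc/stat holds cumulative CPU time counters (user, nice, system, idle, iowait, irq, softirq, steal, and on newer kernels guest and guest_nice), and utilization is the busy share of the change between two samples. A rough Python sketch; counting iowait as idle is my own choice here, and the function names are made up for illustration.

```python
from typing import Tuple


def parse_cpu_line(line: str) -> Tuple[int, int]:
    """Return (idle_ticks, total_ticks) from the aggregate 'cpu' line.

    Fields: user nice system idle iowait irq softirq steal [guest guest_nice].
    guest and guest_nice are already included in user and nice, so only the
    first eight counters are summed to avoid double counting.
    """
    fields = line.split()
    if fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line from /proc/stat")
    counters = [int(v) for v in fields[1:9]]
    idle = counters[3] + counters[4]  # idle + iowait; treating iowait as idle is a judgment call
    return idle, sum(counters)


def utilization(before: str, after: str) -> float:
    """Busy fraction of CPU time between two samples, from 0.0 to 1.0."""
    idle0, total0 = parse_cpu_line(before)
    idle1, total1 = parse_cpu_line(after)
    total_delta = total1 - total0
    if total_delta <= 0:
        return 0.0  # no time elapsed between samples
    return 1.0 - (idle1 - idle0) / total_delta


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Return the first line of /proc/stat (Linux only)."""
    with open(path) as f:
        return f.readline()
```

Take one sample with `read_cpu_line()`, sleep a few seconds, take another, then call `utilization(before, after)`. If that number is low while load per core is above 1, the processes in the queue are likely waiting on something other than the CPU, often disk I/O.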

Are we clear on CPU load now? Awesome!

Now to the second part of the question: “why do I care?” In my opinion, this is the most important part. Here is the answer. Are you ready?…

wait for it…

wait for it…

It depends!

It really depends on what the host is responsible for and what its workload is. If the host delivers time-sensitive information, whether for public or private consumption, you absolutely do not want its load average to exceed 1 per CPU core. If the host is chugging through a queue of work and you don’t care when any given job finishes, you can probably tolerate a load average above 1 per core. Note: if your load average stays above 1 per core 24 hours a day, your host will never catch up (thank you, Captain Obvious!).
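One way to encode “it depends” in monitoring is to flag each host as time-sensitive or not and pick the alert rule from that flag. This is a hypothetical Python sketch: the `Host` class, `should_alert`, the caller-tracked `hours_over_capacity`, and the 12-hour default are illustrative assumptions, not settings from any monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    cores: int
    time_sensitive: bool  # does someone wait on this host's answers?


def should_alert(host: Host, load1: float, hours_over_capacity: float,
                 max_backlog_hours: float = 12.0) -> bool:
    """Decide whether the current load deserves a human's attention.

    load1: the 1-minute load average.
    hours_over_capacity: how long the load has stayed above host.cores,
        tracked by the caller (hypothetical; the kernel does not report it).
    """
    if host.time_sensitive:
        # Any queuing adds latency a user will feel: alert above 1 per core.
        return load1 > host.cores
    # Batch work may queue for a while; alert only when the host has been
    # over capacity long enough that it may never catch up.
    return hours_over_capacity > max_backlog_hours
```

For example, a 16-core `Host("web-1", 16, True)` alerts as soon as the 1-minute load passes 16, while a 4-core `Host("batch-1", 4, False)` can run hot for hours before anyone gets paged. The right backlog limit depends on how much work lands on the batch host each day.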

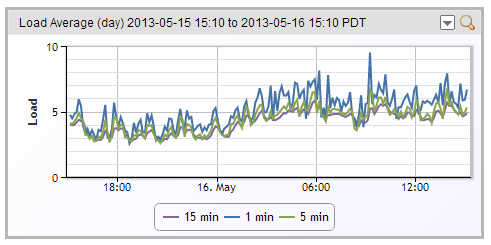

Above is an example of a server with 4 cores whose load hovers right around its capacity of 4, but because it’s only processing jobs that are not time-sensitive, that’s OK.

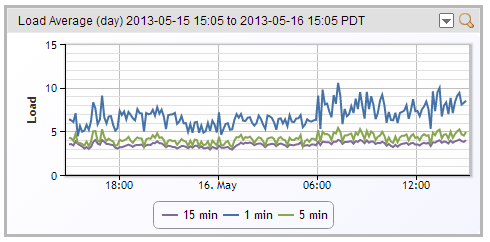

Above is an example of a server with 16 cores that processes time-sensitive information and stays well within its load capacity of 16.

Know what your server is purposed for and monitor responsibly.
